source code: download

Overview

In this age of data explosion, parallel processing is essential to processing a massive volume of data in timely manner. MapReduce, which has been popularized by Google, is a scalable and fault-tolerant data processing tool that enables to process a massive volume of data in parallel with many low-end computing nodes.

However, it is observed that the detection of the failed worker is delayed, which may result in a significant increase in the completion time of jobs with different workload. Thus, we propose two mechanisms to achieve the goal of fast failure detection in Hadoop via heartbeat. They are Adaptive interval and Reputation-based Detector. As the experiments show, the Adaptive interval is advantageous to the small jobs while the Reputation-based Detector can handle well with large jobs.

System Architecture

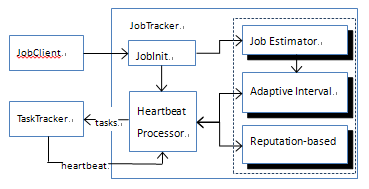

Figure 1 shows Architecture Design of the Adaptive Failure Detction

Figure 1 shows Architecture Design of the Adaptive Failure Detction

Figure 1 gives the overall Architecture Design of the Adaptive Failure Detection

- JobClient first receives a request from users, and then submits this request to JobTracker.

- JobInit prepares the necessary data for the job, including the data locations, executing jars and etc.

- After initializing the job, general information about the job flows into the Job Estimator, which will analyze this information to estimate the execution time of the job.

- Then, the Adaptive interval will use the estimated time to calculate the new adaptive expiry time for each job and configure it into expiry thread and the heartbeat interval at the runtime. The detection method for the Adaptive interval is exactly same as the original Hadoop but differs in its expiry time interval.

- At the same time, the Reputation-based Detector is collecting the fetch-errors extracted from the heartbeat and evaluating the reputation for each TaskTracker. If the reputation of one TaskTracker is lower than the lowest bound, JobTracker will mark that TaskTracker as a failed TaskTracker.

Adaptive interval

We observed that the expiry time to each TaskTracker in Hadoop is 10 minutes by default, which is too fixed and static. Therefore, the design goal of the adaptive interval is to enable Hadoop to dynamically configure its expiry interval which is adaptive to the job size. We first estimate the job execution time according the job input size, after which we change the expiry time according to the estimating value.

- Job Estimator

- Adaptive Expiry Interval

Hadoop jobs have three major phases: map, shuffle and reduce. The estimating execution time (EET) should consist of three components: time to process map data, time to shuffle the data and time to process data in the reduce phase.

If the estimating time is less than the 10 minutes, we use the EET and configure as the expiry interval. Otherwise, we keep the 10 minutes as the expiry interval.

After choosing the expiry interval time, the expiry thread in Heartbeat Processor will use this value to monitor the entire cluster. If JobTracker has not received a heartbeat from one worker within that interval, JobTracker will consider that worker as a failed worker.

Reputation-based Detector

In the execution flow of MapReduce, Reduce task need to fetch the data from the remote map worker. Therefore, if the reduce task cannot fetch the data from the map worker, the map worker is possibly running at an unusual state. The Reputation-Based Detector aims to evaluate the reputation of each worker based on the fetch-errors information monitored by JobTracker. Specifically, The JobTracker will decrease the reputation of the corresponding worker if there are fetch-errors reporting on it. When the reputation of one particular worker is less than a threshold value, the JobTracker will believe that worker as a failed worker.

By referring to the fetch-errors, we call them gossips later on because some of the suspect information is true while others may be not. It is interesting to note that the sematic information contained in these gossips is extremely different. We explore the temporal and spatial characteristics among these gossips.

- Temporal: The gossips tend to be gathered within a short period of time. The more gossips received within that time, the more penalties will be given to that TaskTracker.

- Spatial: The more different workers involved to report, the more JobTracker is convinced that the particular TaskTracker has a failure.

However, how can a worker gain its reputation? A worker can gain its reputation via heartbeat as well. Each time when JobTracker receives a heartbeat, the worker sending this heartbeat will gain an increase on its reputation.

Experiment Results

Figure 2. Average Execution Time of different detection mechnisam with one node failure

Figure 2. Average Execution Time of different detection mechnisam with one node failure

As Figure 2 shows, the native Hadoop can finish the sort and word count programs within 30 seconds and 2.22 minutes respectively, if there are no failures. We inject the worker failure by simply shutdown the TaskTracker service of the worker manually.

While under one worker failure, it is manifest that the performance of the native Hadoop siginificantly decreases since the execution time for the both programs dramtically rises, which are 13 and 14 minutes respectively. The reason for this is that the native Hadoop uses the fixed expiry interval 10 minutes for each worker, which results in the delayed detection of the failed worker.

As for the performance of the Adaptive Interval. From Figure 2, the finish time for both programs is relatively longer than the time without any node failures, but is significantly shorter than the time when there are failures of the native Hadoop. It shows that it has 90% and 80% improvement in execution time for sort and word count respectively.

As for the performance of the Reputation-based Detector. According to the Figure 5, the result for the program sort is slightly shorter than the native Hadoop, but not as good as the result for the program word count, which has achieved 82% execution time decreasing than the native Hadoop, approximately 2.57 minutes.

Publications

Hao Zhu, Haopeng Chen, Adaptive failure detection via heartbeat under Hadoop, 2011 IEEE Asia-Pacific Services Computing Conference, Pages:231-238, Jeju, Korea, 2011.12.12-2011.12.15, ISBN : 978-0-7695-4624-7