Zhang Can, Chen Haopeng, Gao ShuoTao

Overview

We present ALARM, an autonomic load-aware resource management algorithm for managing the physical machines, or the virtual machines in a cloud, that participate in a P2P key-value store. Many existing key-value stores claim to be elastic enough to scale up or down with no downtime or interruption to applications. However, the question of when scaling up or down should take place remains unresolved. The problem gets worse when the data store consists of hundreds of machines, because it is unrealistic for a system administrator to monitor the system and add or remove machines manually. Fortunately, cloud computing and virtualization technology enable real-time provisioning of virtual machines and a way of managing them without human intervention. By monitoring the utilization of multiple resources (CPU, memory, network I/O, etc.) on the virtual machines hosting the data store, ALARM takes effect when some of the machines become overloaded or underloaded. The experimental results show that ALARM helps the Open Chord data store, an open-source implementation of the Chord protocol, scale up and down according to the resource usage of the virtual machines.

Basic Model

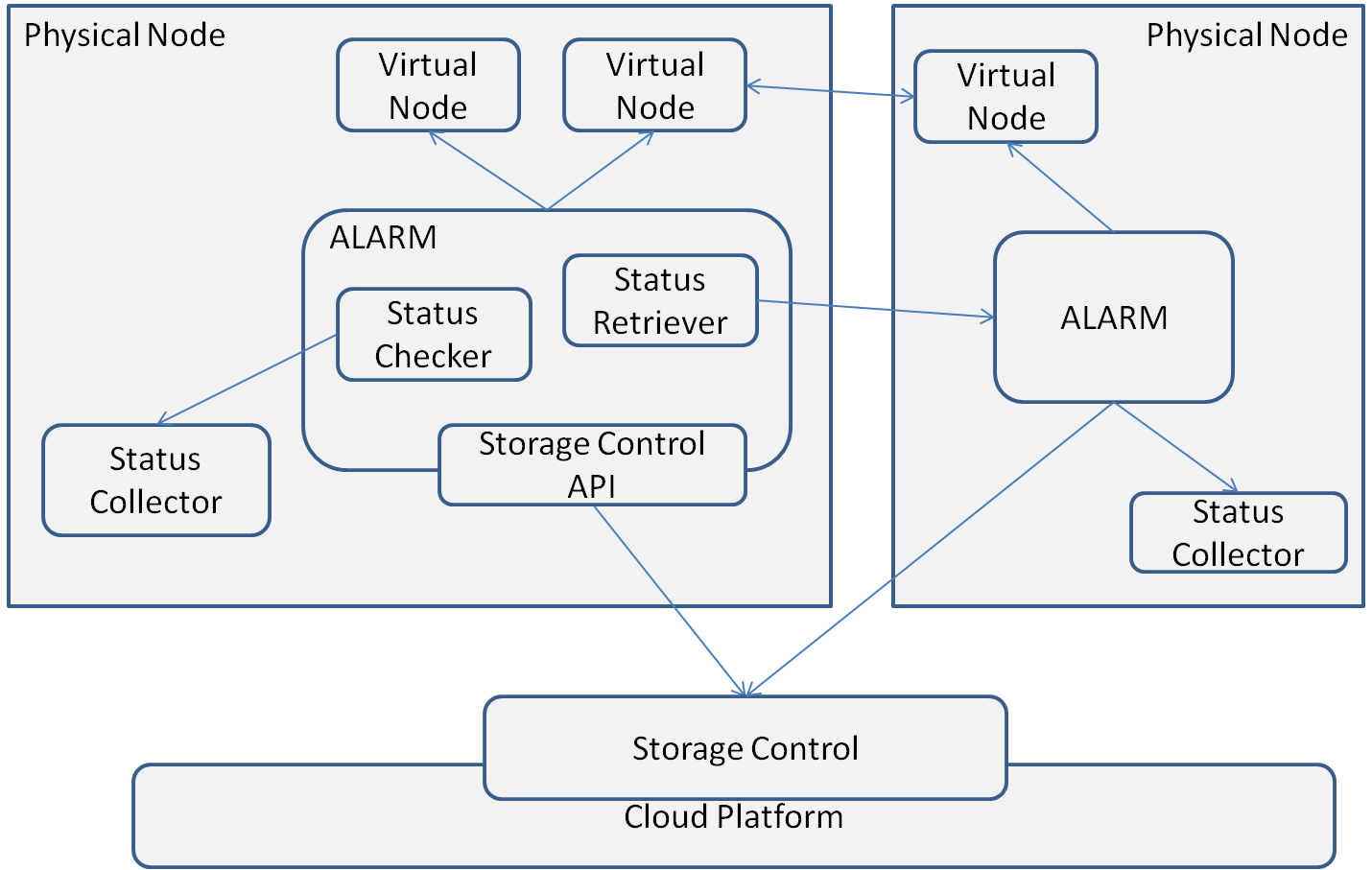

Fig.1 shows the basic resource-management architecture for P2P key-value data stores. There are two types of nodes in our architecture: virtual nodes and physical nodes. Typically, the data in a P2P data store are distributed across computers, and each computer responsible for storing part of the data is known as a node. In our architecture, however, the nodes participating in the P2P data store are defined as virtual nodes, and several virtual nodes can be hosted on one physical node (e.g., a computer or a virtual machine in a cloud).

Fig.1. Basic Resource Management Architecture for P2P data stores

Generally speaking, our resource management algorithm is designed to achieve better utilization of multiple resources on physical nodes by transferring or splitting virtual nodes. The algorithm is composed of four operations: target, merge, split, and data movement. The procedure works as follows:

- target: the algorithm periodically checks whether a physical node should be targeted. A physical node becomes targeted when it is either underloaded or overloaded;

- merge/split: for a targeted underloaded physical node, the algorithm tries to merge another underloaded node with it; for a targeted overloaded node, part of the data is moved to a newly started physical node;

- data movement: finally, a data movement operation is performed.
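
The procedure above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the published ALARM implementation: the thresholds `OVERLOAD` and `UNDERLOAD`, the rule "any resource above the upper threshold" versus "all resources below the lower threshold", the pairing of underloaded nodes, and all names are hypothetical. Each planned action would still be followed by a data movement step, which is omitted here.

```python
from dataclasses import dataclass, field

# Hypothetical thresholds; the text does not state the values ALARM uses.
OVERLOAD = 0.80
UNDERLOAD = 0.20


@dataclass
class PhysicalNode:
    name: str
    # Utilization per resource in [0, 1], e.g. {"cpu": 0.9, "mem": 0.4}.
    usage: dict
    virtual_nodes: list = field(default_factory=list)


def target(node):
    """Classify a physical node as 'overloaded', 'underloaded', or None."""
    # Assumption: one saturated resource is enough to call a node overloaded.
    if any(u > OVERLOAD for u in node.usage.values()):
        return "overloaded"
    # Assumption: a node is underloaded only if every resource is nearly idle.
    if all(u < UNDERLOAD for u in node.usage.values()):
        return "underloaded"
    return None


def plan(nodes):
    """Return a list of (operation, source, destination) tuples."""
    actions = []
    under = [n for n in nodes if target(n) == "underloaded"]
    # merge: pair up underloaded nodes; the first of each pair is emptied.
    for src, dst in zip(under[0::2], under[1::2]):
        actions.append(("merge", src.name, dst.name))
    # split: each overloaded node hands part of its data to a new node.
    for n in nodes:
        if target(n) == "overloaded":
            actions.append(("split", n.name, "new-node-for-" + n.name))
    return actions


nodes = [
    PhysicalNode("pm1", {"cpu": 0.95, "mem": 0.50}),
    PhysicalNode("pm2", {"cpu": 0.10, "mem": 0.15}),
    PhysicalNode("pm3", {"cpu": 0.05, "mem": 0.10}),
    PhysicalNode("pm4", {"cpu": 0.50, "mem": 0.40}),
]
print(plan(nodes))
```

In this toy run, `pm2` and `pm3` are paired for a merge and `pm1` is scheduled for a split, while `pm4` is left alone because it is neither overloaded nor underloaded.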

System Design

The resource management architecture is composed of four components: the Storage Control module, the Status Collector module, the Virtual Node module, and the core ALARM module (Fig.2). First, the Storage Control module is responsible for maintaining a list of available physical nodes in the data store and for interacting with the cloud platform to start or stop a physical node (virtual machine). Second, the Status Collector module periodically (e.g., every 20 seconds) collects the resource utilization of the local machine and stores the history in a local lightweight database. Third, the Virtual Node module is responsible for data storage. The data in the data store are partitioned into fragments, each of which is hosted by one virtual node. Data operations such as insert, retrieve, update, and delete are handled by the virtual node. Fourth, the ALARM module is the controller in a physical node. It is responsible for handling resource adjustment by invoking operations such as target, split, and merge. In addition, it maintains information about the virtual nodes within the physical node.

Fig.2. ALARM System Architecture
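
As a rough illustration of the Status Collector's role, the sketch below stores utilization samples in a local SQLite database and averages them over a recent window. It is an assumption-laden sketch: the text says only that a "local lightweight database" is used, so the choice of SQLite, the table layout, the function names, and the three tracked resources are all hypothetical. How samples are actually read from the operating system is left out; the caller supplies them.

```python
import sqlite3
import time

SAMPLE_INTERVAL_S = 20  # the example collection period mentioned in the text


def open_history(path=":memory:"):
    """Create (if needed) the local history table and return the connection."""
    db = sqlite3.connect(path)
    db.execute(
        "CREATE TABLE IF NOT EXISTS samples ("
        "ts REAL, cpu REAL, mem REAL, net REAL)"
    )
    return db


def record(db, sample, ts=None):
    """Append one utilization sample (values in [0, 1]) to the history."""
    ts = time.time() if ts is None else ts
    db.execute(
        "INSERT INTO samples VALUES (?, ?, ?, ?)",
        (ts, sample["cpu"], sample["mem"], sample["net"]),
    )
    db.commit()


def recent_average(db, window_s, now=None):
    """Average each resource over the last window_s seconds."""
    now = time.time() if now is None else now
    row = db.execute(
        "SELECT AVG(cpu), AVG(mem), AVG(net) FROM samples WHERE ts >= ?",
        (now - window_s,),
    ).fetchone()
    return dict(zip(("cpu", "mem", "net"), row))


# Two samples taken one collection period apart, with explicit timestamps.
db = open_history()
record(db, {"cpu": 0.2, "mem": 0.4, "net": 0.1}, ts=0)
record(db, {"cpu": 0.4, "mem": 0.6, "net": 0.3}, ts=SAMPLE_INTERVAL_S)
print(recent_average(db, window_s=60, now=30))
```

Averaging over a window rather than reacting to a single sample is one plausible way for a controller to avoid scaling on short spikes; whether ALARM does this is not stated in the text.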

Experiment

- Experiment Scenario

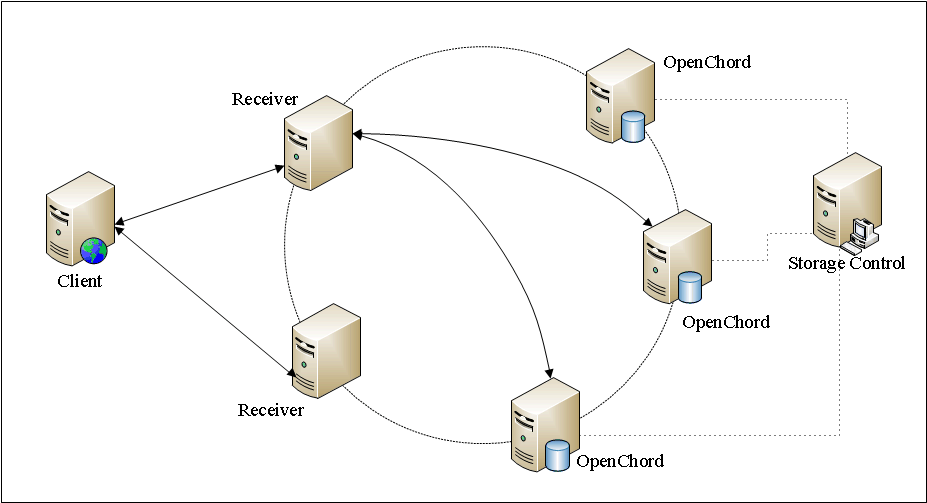

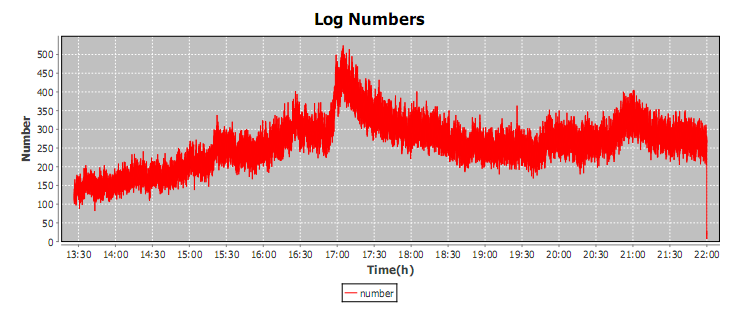

Fig.3 shows the basic architecture of the experiment scenario. In each experiment, seven virtual machines, created from the template “Openchord”, serve as physical nodes, which host the virtual nodes of the Open Chord data store. Three virtual machines, created from the template “Receiver”, serve as request receiver servers. Another ten virtual machines, created from the template “Client”, act as clients that send requests, simulating user queries according to the 1998 World Cup Web site Access Logs [1]. We treat every URL on the web site as a data entry in the data store. With the Chord protocol, these URLs are distributed across different virtual nodes. The clients' requests are sent to the request receivers and dispatched to the appropriate virtual nodes in the Open Chord data store. The 1998 World Cup Web site Access Logs record the user requests to the web site from May 1st to July 26th, and we choose the logs of June 28th as the experimental data. The number of client requests per second is shown in Fig.4.

Fig.3. Experiment scenario

Fig.4. Number of requests
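
To make the distribution of URLs over virtual nodes concrete, the following sketch applies the standard Chord placement rule: keys and nodes are hashed with SHA-1 onto one identifier ring, and each key is stored on its successor, the first node whose identifier is equal to or follows the key's identifier. This is not Open Chord's API (Open Chord is a Java library); the function names, node names, and example URLs are hypothetical.

```python
import hashlib
from bisect import bisect_left


def chord_id(name):
    """Map a string (URL or node name) to a 160-bit point on the ring."""
    return int.from_bytes(hashlib.sha1(name.encode("utf-8")).digest(), "big")


def successor(key_id, sorted_node_ids):
    """Return the first node id >= key_id, wrapping around the ring."""
    i = bisect_left(sorted_node_ids, key_id)
    return sorted_node_ids[i % len(sorted_node_ids)]


def place(urls, node_names):
    """Assign every URL to the virtual node that is its successor."""
    ids = {chord_id(n): n for n in node_names}
    ring = sorted(ids)
    return {u: ids[successor(chord_id(u), ring)] for u in urls}


# Hypothetical virtual nodes and URLs, for illustration only.
placement = place(
    ["/index.html", "/images/logo.gif", "/teams/list.html"],
    ["vnode-1", "vnode-2", "vnode-3"],
)
for url, node in placement.items():
    print(url, "->", node)
```

Because placement depends only on the hash values, adding or removing a virtual node changes the owner of only the keys adjacent to it on the ring, which is what makes merge and split operations local.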

- Experimental Results

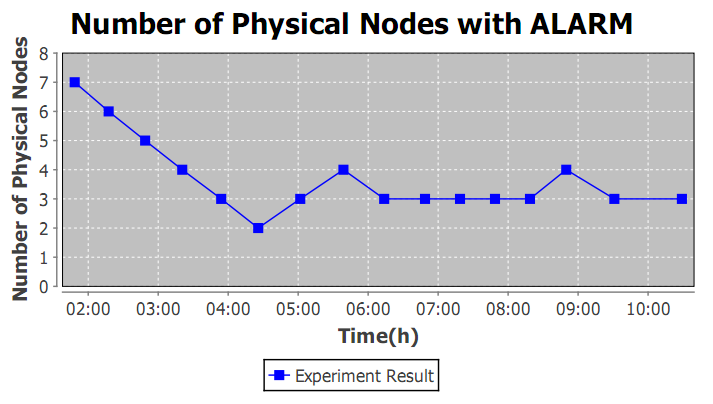

Fig.5 shows the number of physical nodes (virtual machines) in the Open Chord data store under our ALARM resource management algorithm. During the first two and a half hours, because of the relatively light load on the system, the number of virtual machines declined to only 2. However, when the peak of client requests arrived at about 5:30, one node was added and the number of physical nodes increased to 4. It dropped back to 3 after the peak passed. The number of physical nodes then did not change until another peak of requests arrived at about 8:30. We therefore conclude that the scale of the system follows the load on the system, which demonstrates the effectiveness of our ALARM algorithm.

Fig.5. Number of physical nodes

Acknowledgement

We thank the anonymous reviewers of our ALARM paper for their insightful feedback, and the SJTU REINS research group and Zheng Qing for useful discussions and for proofreading the paper.

Publication

Can Zhang, Hao-peng Chen, Shuo-tao Gao, “ALARM: Autonomic Load-Aware Resource Management for P2P Key-value Stores in Cloud,” 2011 Ninth IEEE International Conference on Dependable, Autonomic and Secure Computing, Sydney, Australia, 2011.12.12-2011.12.14, pp. 404-410, ISBN: 978-0-7695-4612-4/11.

Reference

[1] M. Arlitt and T. Jin, “1998 world cup web site access logs,” 1998. [Online]. Available: http://www.acm.org/sigcomm/ITA/